New

“Made with Metabase” community contest: share a project for a chance to win a limited‑edition mechanical keyboard ⌨️

Learn more

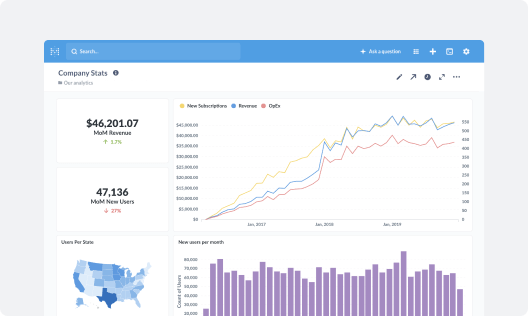

Business Intelligence

Self-service analytics for your team

Embedded Analytics

Fast, flexible customer-facing analytics

Data Sources

Security

Cloud

Demo

Watch 5-minute demo

Embedded analytics SDK

White-label analytics

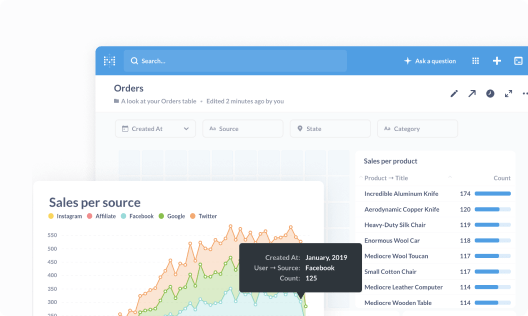

Dashboards and reporting

Drill-through

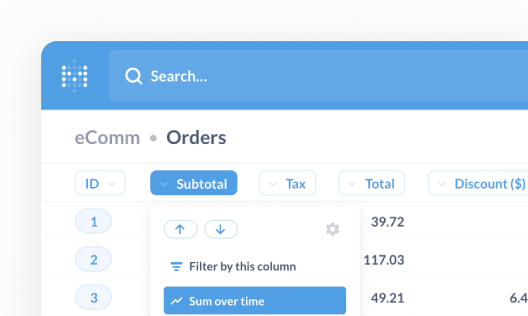

Query builder

SQL editor

Semantic layer

Permissions

CSV upload

Data segregation

Usage analytics

Metabot AI

Updates

What’s new

Roadmap