Product

Features

Docs

Resources

Blog

News, updates, and ideas

Events

Join a live event or watch on demand

Customers

Real companies, real data, real stories

Discussion

Share and connect with other users

Metabase Experts

Find an expert partner

Community Stories

Practical advice from our community

Recent Blog Posts

Product Hunt AMA Recap: embedding, open source success, and more

Introducing Metabase's new Embedded Analytics SDK for React

Metabase alternatives: peeking at other Business Intelligence tools

Maps data visualizations: best practices

How to visualize time-series data: best practices

Business Intelligence

Self-service analytics for your team

Embedded Analytics

Fast, flexible customer-facing analytics

Data Sources

Security

Cloud

Demo

Watch 5-minute demo

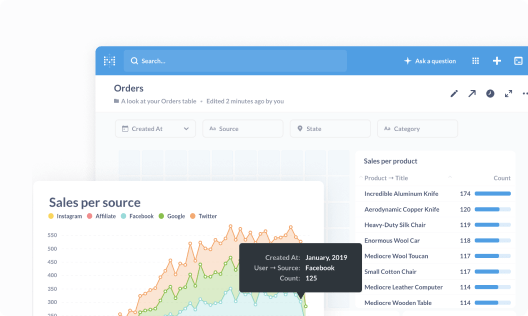

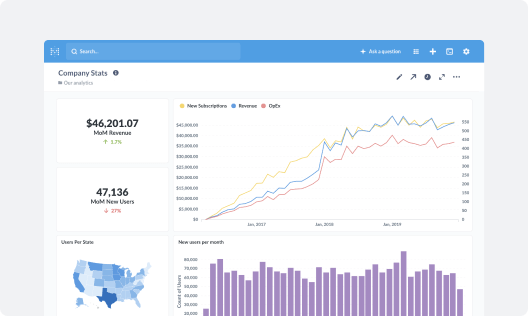

Analytics dashboards

Drill-through

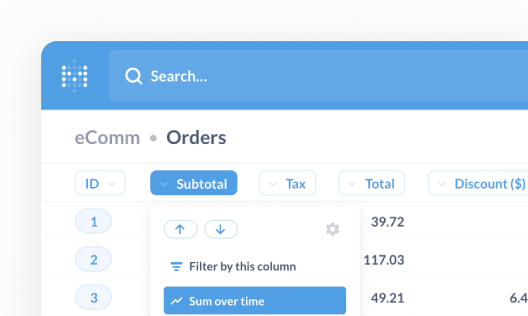

Query builder

SQL editor

Models

Permissions

CSV upload

Sandboxing

Usage analytics

Collections

Updates

What’s new

Roadmap